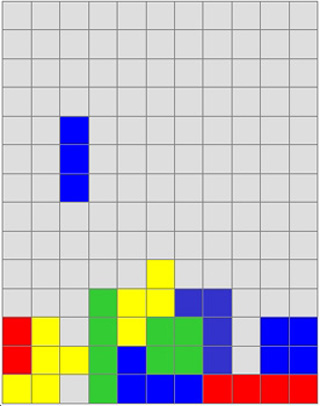

Tetris game strategies can be analyzed using the value function approximation techniques described in this course; see lecture session 10. (Illustration courtesy of MIT OpenCourseWare.)

Instructor(s): Prof. Daniela Pucci De Farias

MIT Course Number: 2.997

As Taught In: Spring 2004

Level: Graduate

Course Description

This course is an introduction to the theory and application of large-scale dynamic programming. Topics include Markov decision processes, dynamic programming algorithms, simulation-based algorithms, theory and algorithms for value function approximation, and policy search methods. The course examines games and applications in areas such as dynamic resource allocation, finance and queueing networks.